Adverse Action Notices and AI Underwriting: CFPB Weighs In

Yesterday the CFPB issued guidance on the use of artificial intelligence in loan underwriting, specifically on how to properly issue an Adverse Action Notice (AAN) when you use AI or complex credit models in your decisioning process.

To summarize: If a lender is using AI in decisioning, they must still be able to specifically and accurately state the principal reason for denial in an AAN.

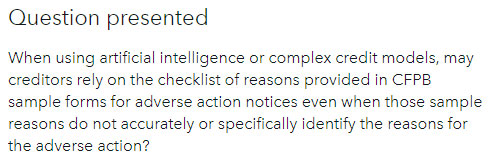

Question Presented

The Bureau's circular starts with a Question Presented:

The CFPB's answer is No. The reason for denial must specifically and accurately indicate the principal reason for the denial.

Background

When a member applies for credit and is denied, the Equal Credit Opportunity Act (ECOA) and Regulation B require that the credit union provide the member with an AAN, which tells them why the loan was denied. The AAN serves two goals: helping consumers understand why they were denied so they can take steps to improve in that area, and preventing discrimination.

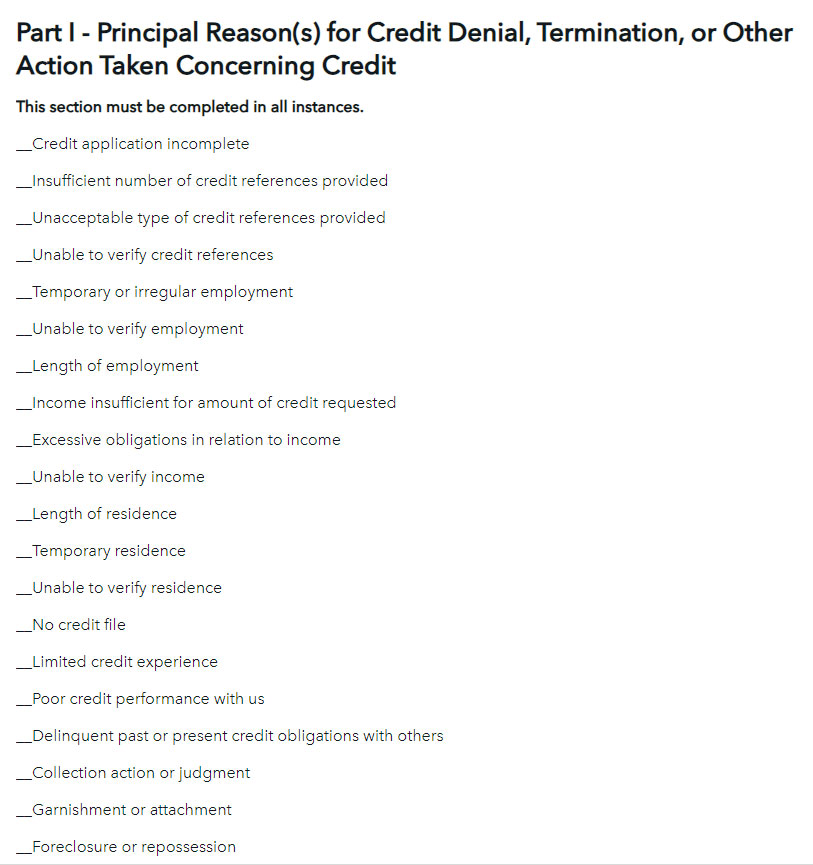

Reg B contains sample forms with a number of checkboxes creditors can use to give the denial reason. These are some of the more common reasons for denial, so the credit union can check the box or boxes that apply:

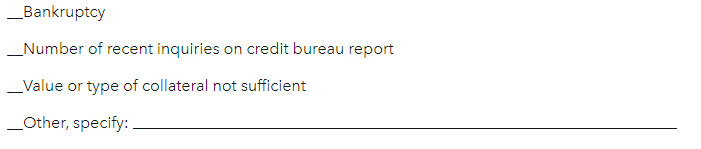

There's also an "Other" box with a blank, where the credit union can list a reason if none of the boxes apply.

The CFPB's guidance has been, and remains, that these checkboxes are illustrative. The obligation is on the lender to state specifically why it is denying the loan. If one of these boxes applies, the lender can use it, but if the boxes are not sufficient, the lender must either modify the form or use the "Other, specify" box and give a specific reason. Additionally, the reasons listed in the AAN must “relate to and accurately describe the factors actually considered or scored by a creditor.”

AI Models

In its discussion of AI decisioning models, the CFPB notes that these models may "sometimes rely on data that are harvested from consumer surveillance or data not typically found in a consumer’s credit file or credit application." In addition to noting the potential harm of this approach, the CFPB discusses the risk that a creditor will be unable to fill out the AAN specifically and accurately. Creditors may not simply check the closest box.

One problem with AI models, the Bureau writes, is that consumers may not anticipate that certain data outside of their credit file could be the primary reason for a credit denial. For example, an AI model might look at an applicant's chosen profession, project their income based on that profession, and conclude that their projected income will not be sufficient to pay back the loan in full. In this instance, "insufficient projected income" or "income insufficient for the amount of credit requested" would not meet the creditor's AAN obligations, because neither reason tells the applicant that their profession was the factor actually considered.

The CFPB gives another example related to adjusting a credit amount based on a consumer's purchase history. An AI model could consider behavioral data, such as where a consumer shops or what type of goods they buy, and recommend that a credit line be closed or reduced. In this case, an AAN that lists "purchasing history" or "disfavored business patronage" as the principal reason for the decision would be insufficient. The CFPB writes that more detail about the behavior that led to the decision would need to be disclosed.

Wait, do I need to give an AAN when I modify an existing credit line?

Yes. Near the bottom of this Circular, the CFPB cites an Advisory Opinion and states that the AAN requirements "extend to adverse actions taken in connection with existing credit accounts (i.e., an account termination or an unfavorable change in the terms of an account that does not affect all or substantially all of a class of the creditor’s accounts)."

They also note that AAN requirements apply equally to all credit decisions, regardless of the technology used to make them, and that as credit models evolve, lenders must ensure these models comply with consumer protection laws.

That's really the bottom line here: if you're using AI to help make lending decisions, you have to be able to understand the reasons the AI model is making its decision, because your legal obligation to communicate that to the borrower remains in place. In a world where AI models and algorithms are so often "black boxes" that even those involved don't fully understand how they work, it's critical for credit unions to make sure they don't run afoul of the ECOA and Reg B in deploying these technologies.
